Welcome to our exploration of Kubernetes architecture! Kubernetes, often abbreviated as K8s, is a powerful tool designed to manage containerized applications in a scalable and automated fashion. This blog post aims to provide a high-level understanding of Kubernetes architecture using an analogy of ships, which simplifies the complex interplay between its components. Special thanks to KodeKloud for their insightful lecture, which inspired this overview.

The Kubernetes Cluster: Ships at Sea

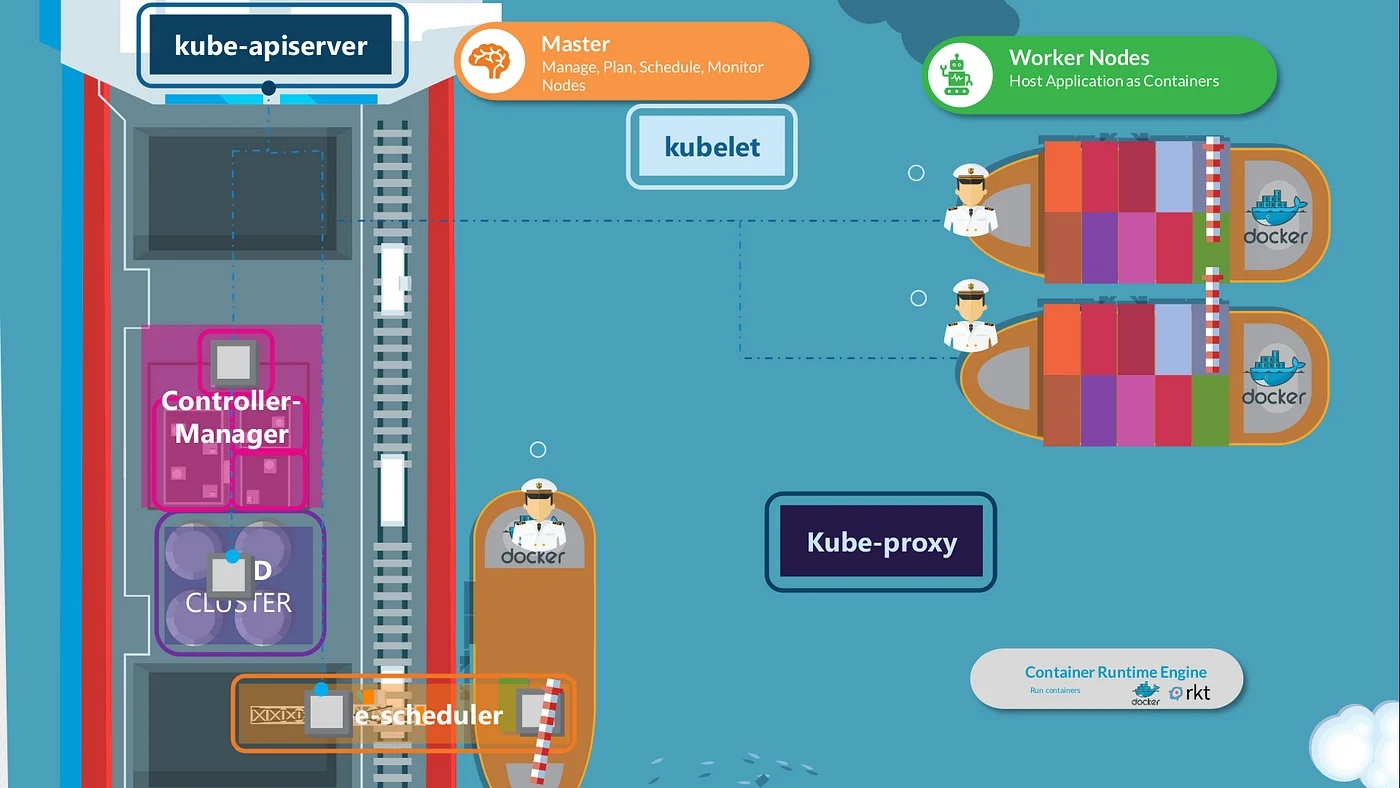

At its core, a Kubernetes cluster comprises two types of nodes: worker nodes and master nodes. To understand their roles, let’s imagine a fleet of ships:

- Worker Nodes (Cargo Ships): These ships carry the actual load—in Kubernetes, this translates to running your containerized applications.

- Master Nodes (Control Ships): These ships oversee and manage the cargo ships, ensuring everything runs smoothly.

Master Nodes: The Control Ships

Master nodes are the brain of the Kubernetes cluster, managing the state and operations of the entire system. They contain several key components, collectively known as the control plane:

etcd: A highly available key-value store that keeps all cluster data. Think of it as the central database that logs the details of every ship and container.

Kube Scheduler: Similar to cranes that load containers onto ships, the Kube Scheduler assigns containers to nodes based on resource requirements, node capacity, and various constraints like node affinity and tolerations.

Controllers: These are specialized offices on the control ships handling specific tasks:

- Node Controller: Manages the state of nodes, ensuring they are operational and adding new ones to the cluster as needed.

- Replication Controller: Ensures the desired number of container replicas are running at all times.

Kube API Server: The central management component that orchestrates all operations within the cluster. It exposes the Kubernetes API, allowing users and controllers to interact with the cluster, monitor its state, and make necessary changes.

Worker Nodes: The Cargo Ships

Worker nodes are where your applications run, managed by two key components:

Kubelet: The captain of the ship, responsible for communicating with the Kube API Server, receiving instructions, and managing containers on the node. The Kubelet also sends status reports back to the master nodes.

Kube-proxy: Ensures smooth communication between containers across different nodes by maintaining network rules on each node. This is essential for services to interact seamlessly, like a web server on one node communicating with a database server on another.

Container Runtime

All nodes, whether master or worker, require a container runtime engine to run containers. While Docker is a popular choice, Kubernetes also supports other runtimes like containerd and CRI-O. This runtime environment is crucial for deploying containerized applications and components.

Conclusion

Kubernetes architecture, with its intricate components and interactions, ensures efficient and scalable management of containerized applications. By likening it to a fleet of ships with dedicated roles and responsibilities, we can better understand the complex but elegant orchestration that Kubernetes provides.

In upcoming posts, we will delve deeper into each component, exploring their configurations and roles in greater detail. Stay tuned as we continue to navigate the fascinating world of Kubernetes!

Credit: This content is inspired by a lecture from KodeKloud.